Introduction

Reinforcement Learning (RL) is a cornerstone of artificial intelligence (AI), enabling systems to make decisions and optimize their performance through trial and error. By mimicking how humans and animals learn from their environment, RL has propelled AI into domains requiring adaptability, strategy, and autonomy. This blog post dives into the history, foundational concepts, key milestones, and the promising future of RL, offering readers a comprehensive understanding of its relevance in advancing AI.

What is Reinforcement Learning?

At its core, RL is a type of machine learning where an agent interacts with an environment, learns from the consequences of its actions, and strives to maximize cumulative rewards over time. Unlike supervised learning, where models are trained on labeled data, RL emphasizes learning through feedback in the form of rewards or penalties.
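
To make the interaction loop concrete, here is a minimal sketch in Python. The `CorridorEnv` class, its reward values, and the two policies are invented for illustration and do not come from any library. The point is only the shape of the loop: observe a state, choose an action, receive a reward, repeat.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: walk along a corridor to reach the far end."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        # Start every episode at the left end of the corridor.
        self.position = 0
        return self.position

    def step(self, action):
        # action 1 moves right, anything else moves left (clamped to the corridor).
        move = 1 if action == 1 else -1
        self.position = max(0, min(self.length - 1, self.position + move))
        done = self.position == self.length - 1
        # Assumed reward scheme: +1.0 at the goal, a small penalty on every other step.
        reward = 1.0 if done else -0.1
        return self.position, reward, done


def run_episode(env, policy, max_steps=100):
    """Run one episode and return the cumulative (undiscounted) reward."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)
        state, reward, done = env.step(action)
        total_reward += reward
        if done:
            break
    return total_reward


def random_policy(state):
    return random.choice([0, 1])


def always_right(state):
    return 1
```

With `always_right`, the agent reaches the goal in four steps and collects a return of about 0.7 (three step penalties of 0.1 plus the final reward of 1.0). A random policy can never do better and usually does worse, which is the gap that learning is meant to close.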

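The problem is typically formalized as a Markov Decision Process (MDP), which also specifies state-transition probabilities and a discount factor. Together with the agent’s policy and value function, the key elements are: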

- States (S): The situations the agent encounters.

- Actions (A): The set of decisions available to the agent.

- Rewards (R): Feedback for the agent’s actions, guiding its learning process.

- Policy (π): A strategy mapping states to actions.

- Value Function (V): An estimate of the expected cumulative future reward from a given state when following a policy.
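
As a rough illustration of how these pieces fit together, the sketch below defines a tiny two-state MDP and solves it with value iteration, a classic dynamic-programming method. The states, actions, rewards, and the discount factor of 0.9 are all made up for the example. It also needs transition probabilities, which the list above leaves implicit.

```python
# Hypothetical two-state MDP, invented purely for illustration.
STATES = ["low", "high"]
ACTIONS = ["wait", "work"]
GAMMA = 0.9  # discount factor (assumed value)

# TRANSITIONS[state][action] is a list of (probability, next_state, reward).
TRANSITIONS = {
    "low": {
        "wait": [(1.0, "low", 0.0)],
        "work": [(1.0, "high", -1.0)],
    },
    "high": {
        "wait": [(1.0, "high", 2.0)],
        "work": [(1.0, "low", 3.0)],
    },
}


def q_value(V, state, action):
    """Expected return of taking `action` in `state`, then following values V."""
    return sum(
        prob * (reward + GAMMA * V[next_state])
        for prob, next_state, reward in TRANSITIONS[state][action]
    )


def value_iteration(tol=1e-10):
    """Repeat the Bellman optimality backup until the values stop changing."""
    V = {s: 0.0 for s in STATES}
    while True:
        new_V = {s: max(q_value(V, s, a) for a in ACTIONS) for s in STATES}
        if max(abs(new_V[s] - V[s]) for s in STATES) < tol:
            return new_V
        V = new_V


def greedy_policy(V):
    """Policy that picks the highest-valued action in each state."""
    return {s: max(ACTIONS, key=lambda a: q_value(V, s, a)) for s in STATES}
```

Under these assumed numbers, the values converge to roughly 17 for `low` and 20 for `high`, and the greedy policy is to work in `low` and wait in `high`.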

The Origins of Reinforcement Learning

RL has its roots in psychology and neuroscience, inspired by behaviorist theories of learning and decision-making.

- Behavioral Psychology Foundations (1910s-1940s):

  - Thorndike’s Law of Effect (1911): Edward Thorndike proposed that actions followed by favorable outcomes are more likely to be repeated, laying the groundwork for reward-based learning.

  - B.F. Skinner’s Operant Conditioning (1930s-40s): Skinner introduced reinforcement concepts through experiments with animals, showing how rewards shape behavior.

- Mathematical and Computational Foundations (1950s-1980s):

  - Bellman’s Dynamic Programming (1957): Richard Bellman formalized sequential decision-making in stochastic environments with the Bellman equation, which became a cornerstone of RL algorithms.

  - Temporal-Difference Learning (1959-1988): Arthur Samuel’s checkers-playing program (1959) anticipated temporal-difference ideas, and Richard Sutton’s TD learning (1988) formalized them, bridging behaviorist ideas and computational methods.
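
For reference, the Bellman equation and the temporal-difference update can be written compactly. The notation here (transition probabilities P, discount factor γ, step size α) follows common textbook usage and is an assumption, since this post does not fix its own symbols.

```latex
% Bellman optimality equation for the state-value function
V^*(s) = \max_{a \in A} \sum_{s' \in S} P(s' \mid s, a) \left[ R(s, a, s') + \gamma V^*(s') \right]

% TD(0) update after observing reward R_{t+1} and next state S_{t+1}
V(S_t) \leftarrow V(S_t) + \alpha \left[ R_{t+1} + \gamma V(S_{t+1}) - V(S_t) \right]
```

The first defines the optimal value of a state recursively. The second nudges a value estimate toward a one-step bootstrapped target, which is the core of temporal-difference learning.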

Early Examples of Reinforcement Learning in AI

- Checkers-playing Program (1959):

  - Arthur Samuel developed a program that learned to play checkers, using methods that anticipated later RL techniques. By improving its strategy over time, it demonstrated that a learning system could handle complex decision spaces.

- TD-Gammon (1992):

  - Gerald Tesauro’s backgammon program used temporal-difference learning to train itself through self-play. It reached a level close to that of top human players, showcasing RL’s potential in complex games.

- Robotics and Control (1980s-1990s):

  - Early experiments applied RL to robotics, using methods like Q-learning (Watkins, 1989) to enable autonomous agents to navigate and optimize physical tasks.

Key Advances in Reinforcement Learning

- Q-Learning and SARSA (1989-1994):

  - Q-Learning: Introduced by Chris Watkins in 1989, this model-free, off-policy method allows agents to learn optimal policies without a model of the environment.

  - SARSA (State-Action-Reward-State-Action): An on-policy variant (Rummery and Niranjan, 1994) that learns from the actions the agent’s current policy actually takes, which can lead to safer exploration in certain settings.

- Deep Reinforcement Learning (2010s):

  - The integration of RL with deep learning (e.g., Deep Q-Networks by DeepMind in 2013) revolutionized the field. This approach allowed RL to scale to high-dimensional inputs, such as the raw pixels of video games and sensor data in robotics.

- Policy Gradient Methods:

  - These methods, including Trust Region Policy Optimization (TRPO, 2015) and Proximal Policy Optimization (PPO, 2017), improved RL’s ability to handle continuous action spaces and stabilized training.

- AlphaGo and AlphaZero (2016-2018):

  - DeepMind’s AlphaGo combined deep neural networks, RL, and Monte Carlo Tree Search to defeat human champions in Go, a game previously considered too complex for AI. AlphaZero refined this approach by mastering chess, shogi, and Go given only the rules of each game, learning entirely through self-play.
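
The difference between Q-learning and SARSA described above comes down to one term in the update rule. The sketch below shows both tabular updates side by side. The learning rate, discount factor, two-action setup, and function names are assumptions chosen for illustration.

```python
import random
from collections import defaultdict

ALPHA = 0.5   # learning rate (assumed value)
GAMMA = 0.9   # discount factor (assumed value)
ACTIONS = [0, 1]


def epsilon_greedy(Q, state, epsilon):
    """Explore with probability epsilon, otherwise pick the best-known action."""
    if random.random() < epsilon:
        return random.choice(ACTIONS)
    return max(ACTIONS, key=lambda a: Q[(state, a)])


def q_learning_update(Q, s, a, r, s_next, done):
    """Off-policy: bootstrap from the best action in the next state."""
    best_next = 0.0 if done else max(Q[(s_next, b)] for b in ACTIONS)
    Q[(s, a)] += ALPHA * (r + GAMMA * best_next - Q[(s, a)])


def sarsa_update(Q, s, a, r, s_next, a_next, done):
    """On-policy: bootstrap from the action the agent will actually take next."""
    next_value = 0.0 if done else Q[(s_next, a_next)]
    Q[(s, a)] += ALPHA * (r + GAMMA * next_value - Q[(s, a)])


# Usage: same transition, different targets.
Q_off = defaultdict(float, {(1, 0): 1.0, (1, 1): 2.0})
Q_on = defaultdict(float, {(1, 0): 1.0, (1, 1): 2.0})
q_learning_update(Q_off, s=0, a=1, r=0.0, s_next=1, done=False)
sarsa_update(Q_on, s=0, a=1, r=0.0, s_next=1, a_next=0, done=False)
```

In this usage example, Q-learning moves `Q_off[(0, 1)]` to about 0.9 because it assumes the best next action (value 2.0), while SARSA moves `Q_on[(0, 1)]` to about 0.45 because the agent actually takes the weaker next action (value 1.0). That is why SARSA's estimates reflect the cost of its own exploration.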

Current Applications of Reinforcement Learning

- Robotics:

  - RL trains robots to perform complex tasks like assembly, navigation, and manipulation in dynamic environments. Systems like OpenAI’s Dactyl use RL to achieve dexterous object manipulation.

- Autonomous Vehicles:

  - RL is being applied to decision-making in self-driving cars, including route optimization, collision avoidance, and adaptive responses to traffic.

- Healthcare:

  - RL is being explored for personalized treatment planning, drug discovery, and adaptive medical imaging, leveraging its capacity for optimization in complex decision spaces.

- Finance:

  - RL is employed in portfolio management, trading strategies, and risk assessment, where policies must adapt to volatile markets.

The Future of Reinforcement Learning

- Scaling RL in Multi-Agent Systems:

  - Collaborative and competitive multi-agent RL systems are being developed for applications like autonomous swarms, smart grids, and game-theoretic problems.

- Sim-to-Real Transfer:

  - Bridging the gap between simulated environments and real-world applications is a priority, so that agents trained in simulation generalize effectively to physical systems.

- Explainable Reinforcement Learning (XRL):

  - As RL systems become more complex, improving their interpretability will be crucial for trust, safety, and ethical compliance.

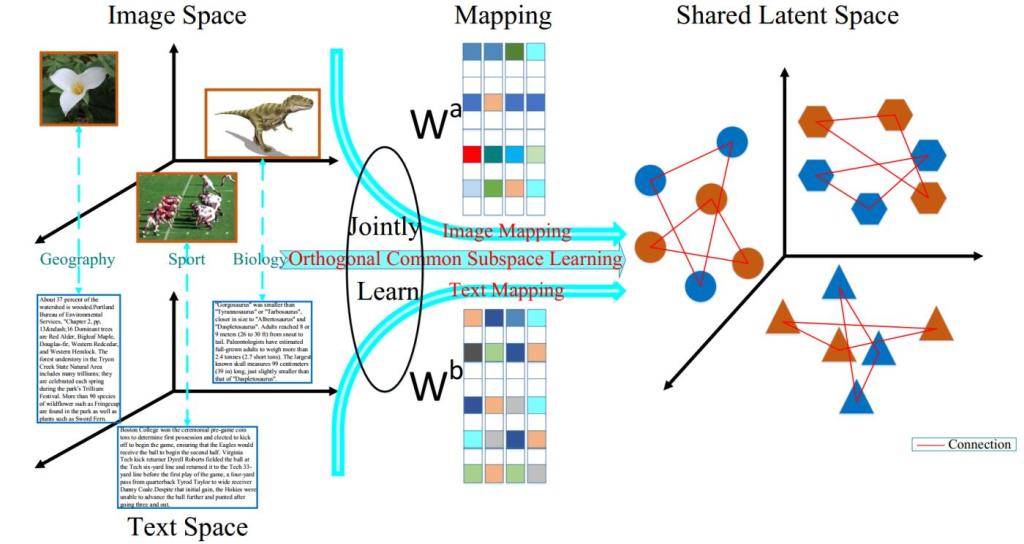

- Integrating RL with Other AI Paradigms:

  - Hybrid systems combining RL with supervised and unsupervised learning promise greater adaptability and scalability.

Reinforcement Learning: Why It Matters

Reinforcement Learning remains one of AI’s most versatile and impactful branches. Its ability to solve dynamic, high-stakes problems has proven valuable in domains ranging from entertainment to life-saving applications. The continuous evolution of RL methods, combined with advances in computational power and data availability, makes it likely to remain central to the pursuit of artificial general intelligence (AGI).

By understanding its history, principles, and applications, professionals and enthusiasts alike can appreciate the transformative potential of RL and its contributions to the broader AI landscape.

As RL progresses, it invites us to explore the boundaries of what machines can achieve, urging researchers, developers, and policymakers to collaborate in shaping a future where intelligent systems serve humanity’s best interests.

Our next post will dive deeper into this topic. Please let us know if there is anything you would like us to cover.

Follow DTT Podcasts on Spotify.