1. What Do We Mean by “Novel Insights”?

A “novel insight” is a discrete, verifiable piece of knowledge that did not exist in the source corpus, is non-obvious to domain experts, and can be traced to a reproducible reasoning path. Think of a fresh scientific hypothesis, a new materials formulation, or a previously unseen cybersecurity attack graph.

Sam Altman’s recent prediction that frontier models will “figure out novel insights” by 2026 pushed the term into mainstream AI discourse. techcrunch.com

Classical machine-learning systems mostly rediscovered patterns humans had already encoded in data. The next wave promises something different: agentic, multi-modal models that autonomously traverse vast knowledge spaces, test hypotheses in simulation, and surface conclusions researchers never explicitly requested.

2. Why 2026 Looks Like a Tipping Point

| Catalyst | 2025 Status | What Changes by 2026 |
|---|---|---|
| Compute economics | NVIDIA Blackwell Ultra GPUs ship late-2025 | First Vera Rubin GPUs deliver a new memory stack and an order-of-magnitude jump in energy-efficient FLOPS, slashing simulation costs. 9meters.com |
| Regulatory clarity | Fragmented global rules | EU AI Act becomes fully applicable on 2 Aug 2026, giving enterprises a common governance playbook for “high-risk” and “general-purpose” AI. artificialintelligenceact.eu, transcend.io |
| Infrastructure scale-out | Regional GPU scarcity | EU super-clusters add >3,000 exaflops of Blackwell compute, matching U.S. hyperscale capacity. investor.nvidia.com |
| Frontier model maturity | GPT-4o, Claude 4, Gemini 2.5 | GPT-4.1, Gemini 1M, and Claude multi-agent stacks mature, validated on year-long pilots. openai.com, theverge.com, ai.google.dev |
| Commercial proof points | Early AI agents in consumer apps | Meta, Amazon and Booking show revenue lift from production “agentic” systems that plan, decide and transact. investors.com |

The convergence of cheaper compute, clearer rules, and proven business value explains why investors and labs are anchoring roadmaps on 2026.

3. Key Technical Drivers Behind Novel-Insight AI

3.1 Exascale & Purpose-Built Silicon

Blackwell Ultra and its 2026 successor, Vera Rubin, plus a wave of domain-specific inference ASICs detailed by IDTechEx, bring training cost curves down by ~70 %. 9meters.com, idtechex.com This makes it economically viable to run thousands of concurrent experiment loops, which is essential for insight discovery.

3.2 Million-Token Context Windows

OpenAI’s GPT-4.1, Google’s Gemini long-context API and Anthropic’s Claude roadmap already process up to 1 million tokens, allowing entire codebases, drug libraries or legal archives to sit in a single prompt. openai.com, theverge.com, ai.google.dev Long context lets models cross-link distant facts without lossy retrieval pipelines.
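As a rough illustration of what "sitting in a single prompt" means in practice, the sketch below estimates whether a set of documents fits a 1-million-token window. The 4-characters-per-token ratio is a common rule of thumb for English prose, not a property of any particular model's tokenizer, and the function names and the reserved output budget are assumptions made for this example.

```python
from dataclasses import dataclass

# Assumption: ~4 characters per token is a rough heuristic for English prose.
# Real tokenizers vary by model and by content (code, non-English text, etc.).
CHARS_PER_TOKEN = 4
CONTEXT_WINDOW_TOKENS = 1_000_000  # the 1M-token figure cited in the text


@dataclass
class FitReport:
    estimated_tokens: int
    budget_tokens: int
    fits: bool


def estimate_tokens(text: str) -> int:
    """Estimate token count from character length (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(documents: list[str], reserved_for_output: int = 8_000) -> FitReport:
    """Check whether all documents fit in the window, leaving room for a reply."""
    budget = CONTEXT_WINDOW_TOKENS - reserved_for_output
    total = sum(estimate_tokens(doc) for doc in documents)
    return FitReport(estimated_tokens=total, budget_tokens=budget, fits=total <= budget)
```

For a real deployment, the heuristic would be replaced by the token counter of whichever model is in use; the point here is only that the budget check is cheap to run before committing to a single-prompt design.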

3.3 Agentic Architectures

Instead of one monolithic model, “agents that call agents” decompose a problem into planning, tool-use and verification sub-systems. WisdomTree’s analysis pegs structured‐task automation (research, purchasing, logistics) as the first commercial beachhead. wisdomtree.com Early winners (Meta’s assistant, Amazon’s Rufus, Booking’s Trip Planner) show how agents convert insight into direct action. investors.com Engineering blogs from Anthropic detail multi-agent orchestration patterns and their scaling lessons. anthropic.com
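The planning, tool-use and verification split described above can be sketched in a few lines. This is a toy under stated assumptions, not any vendor's orchestration pattern: every name here (`Step`, `plan`, `TOOLS`, `verify`, `run_agent`) is hypothetical, and the planner and tools are hard-coded stubs where a real system would call models and external APIs.

```python
from dataclasses import dataclass
from typing import Callable

# All names below are hypothetical stand-ins for illustration only.


@dataclass
class Step:
    tool: str
    argument: str


def plan(goal: str) -> list[Step]:
    """Planner stub: break a goal into tool calls (hard-coded for illustration)."""
    return [Step("search", goal), Step("summarize", goal)]


# Tool registry: each tool maps a string argument to a string result.
TOOLS: dict[str, Callable[[str], str]] = {
    "search": lambda query: f"3 documents found for '{query}'",
    "summarize": lambda topic: f"summary of {topic}",
}


def verify(step: Step, result: str) -> bool:
    """Verifier stub: accept only non-empty results from known tools."""
    return step.tool in TOOLS and bool(result.strip())


def run_agent(goal: str) -> list[str]:
    """Run plan -> tool use -> verification, keeping only verified results."""
    verified: list[str] = []
    for step in plan(goal):
        tool = TOOLS.get(step.tool)
        if tool is None:
            continue  # unknown tool: skip rather than guess
        result = tool(step.argument)
        if verify(step, result):
            verified.append(result)
    return verified
```

Keeping the verifier as a separate function, rather than folding it into each tool, mirrors the idea that checking a result is its own sub-system and can be strengthened independently of the planner.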

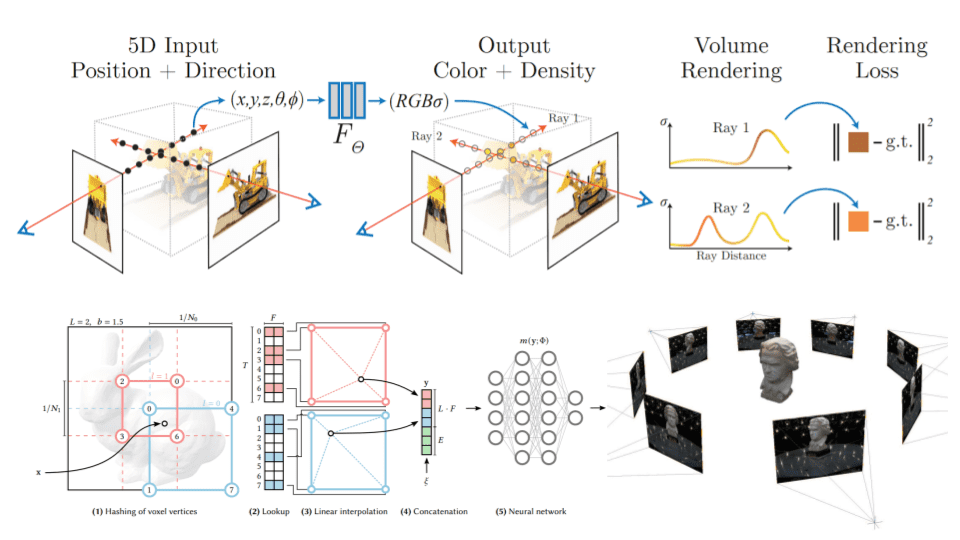

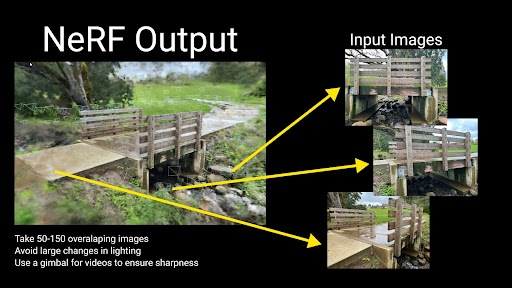

3.4 Multi-Modal Simulation & Digital Twins

Google’s Gemini 2.5, with its 1M-token window, was designed for “complex multimodal workflows,” combining video, CAD, sensor feeds and text. codingscape.com When paired with physics-based digital twins running on exascale clusters, models can explore design spaces millions of times faster than human R&D cycles.

3.5 Open Toolchains & Fine-Tuning APIs

OpenAI’s o3/o4-mini and similar lightweight models provide affordable, enterprise-grade reasoning endpoints, encouraging experimentation outside Big Tech. openai.com Expect a Cambrian explosion of vertical fine-tunes—climate science, battery chemistry, synthetic biology—feeding the insight engine.

Why Do These “Key Technical Drivers” Matter?

- It Connects Vision to Feasibility

Predictions that AI will start producing genuinely new knowledge in 2026 sound bold. The driver section shows how that outcome becomes technically and economically possible, linking the high-level story to concrete enablers like exascale GPUs, million-token context windows, and agent-orchestration frameworks. Without these specifics the argument would read as hype; with them, it becomes a plausible roadmap grounded in hardware release cycles, API capabilities, and regulatory milestones.

- It Highlights the Dependencies You Must Track

For strategists, each driver is an external variable that can accelerate or delay the insight wave:

- Compute economics – If Vera Rubin-class silicon slips a year, R&D loops stay pricey and insight generation stalls.

- Million-token windows – If long-context models prove unreliable, enterprises will keep falling back on brittle retrieval pipelines.

- Agentic architectures – If tool-calling agents remain flaky, “autonomous research” won’t scale.

Understanding these dependencies lets executives time investment and risk-mitigation plans instead of reacting to surprises.

- It Provides a Diagnostic Checklist for Readiness

Each technical pillar maps to an internal capability question:

| Driver | Readiness Question | Illustrative Example |
|---|---|---|
| Exascale & purpose-built silicon | Do we have budgeted access to ≥10× current GPU capacity by 2026? | A pharma firm booking time on an EU super-cluster for nightly molecule screens. |
| Million-token context | Is our data governance clean enough to drop entire legal archives or codebases into a prompt? | A bank ingesting five years of board minutes and compliance memos in one shot to surface conflicting directives. |
| Agentic orchestration | Do we have sandboxed APIs and audit trails so AI agents can safely purchase cloud resources or file Jira tickets? | A telco’s provisioning bot ordering spare parts and scheduling field techs without human hand-offs. |
| Multimodal simulation | Are our CAD, sensor, and process-control systems emitting digital-twin-ready data? | An auto OEM feeding crash-test videos, LIDAR, and material specs into a single 1M-token Gemini prompt to iterate chassis designs overnight. |

- It Frames the Business Impact in Concrete Terms

By tying each driver to an operational use case, you can move from abstract optimism to line-item benefits: faster time-to-market, smaller R&D head-counts, dynamic pricing, or real-time policy simulation. Stakeholders outside the AI team (finance, ops, legal) can see exactly which technological leaps translate into revenue, cost, or compliance gains.

- It Clarifies the Risk Surface

Each enabler introduces new exposures:

- Long-context models can leak sensitive data.

- Agent swarms can act unpredictably without robust verification loops.

- Domain-specific ASICs create vendor lock-in and supply-chain risk.

Surfacing these risks early triggers the governance, MLOps, and policy work streams that must run in parallel with technical adoption.

Bottom line: the key technical drivers are the connective tissue between a compelling future narrative and the day-to-day decisions that make or break it. Treat them as both a checklist for organizational readiness and a scorecard you can revisit each quarter to see whether 2026’s insight inflection is still on track.
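One way to make the quarterly scorecard concrete is a small data structure that records a self-assessed score per driver and flags the gaps. The driver names are taken from the readiness table above; the 0 to 5 scale, the threshold of 3, and the class and method names are assumptions chosen for this sketch rather than an established framework.

```python
from dataclasses import dataclass

# Driver names come from the readiness table; the 0-5 scale and the
# threshold of 3 are assumptions chosen for this example.
DRIVERS = (
    "Exascale & purpose-built silicon",
    "Million-token context",
    "Agentic orchestration",
    "Multimodal simulation",
)
READY_THRESHOLD = 3


@dataclass
class QuarterlyScorecard:
    quarter: str
    scores: dict[str, int]  # driver name -> self-assessed score, 0 (none) to 5 (ready)

    def __post_init__(self) -> None:
        # Reject scorecards that skip a driver or use an out-of-range score.
        for driver in DRIVERS:
            score = self.scores.get(driver)
            if score is None or not 0 <= score <= 5:
                raise ValueError(f"missing or out-of-range score for {driver!r}")

    def gaps(self) -> list[str]:
        """Drivers scoring below the readiness threshold, in table order."""
        return [d for d in DRIVERS if self.scores[d] < READY_THRESHOLD]

    def average(self) -> float:
        """Mean score across all four drivers."""
        return sum(self.scores[d] for d in DRIVERS) / len(DRIVERS)
```

Comparing `gaps()` from one quarter to the next gives a simple signal of whether readiness is improving, without pretending the scores are more precise than a structured judgment call.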

4. How Daily Life Could Change

- Workplace: Analysts get “co-researchers” that surface contrarian theses, legal teams receive draft arguments built from entire case-law corpora, and design engineers iterate devices overnight in generative CAD.

- Consumer: Travel bookings shift from picking flights to approving an AI-composed itinerary (already live in Booking’s Trip Planner). investors.com

- Science & Medicine: AI proposes unfamiliar protein folds or composite materials; human labs validate the top 1 %.

- Public Services: Cities run continuous scenario planning—traffic, emissions, emergency response—adjusting policy weekly instead of yearly.

5. Pros and Cons of the Novel-Insight Era

| Upside | Trade-offs |
|---|---|
| Accelerated discovery cycles (months to days) | Verification debt: spurious but plausible insights can slip through (90 % of agent projects may still fail). medium.com |
| Democratized expertise; SMEs gain research leverage | Intellectual-property ambiguity over machine-generated inventions |
| Productivity boosts comparable to prior industrial revolutions | Job displacement in rote analysis and junior research roles |
| Rapid response to global challenges (climate, pandemics) | Concentration of compute and data advantages in a few regions |
| Regulatory frameworks (EU AI Act) enforce transparency | Compliance cost may slow open-source and startups |

6. Conclusion — 2026 Is Close, but Not Inevitable

Hardware roadmaps, policy milestones and commercial traction make 2026 a credible milestone for AI systems that surprise their creators. Yet the transition hinges on disciplined evaluation pipelines, open verification standards, and cross-disciplinary collaboration. Leaders who invest this year—in long-context tooling, agent orchestration, and robust governance—will be best positioned when the first genuinely novel insights start landing in their inbox.

Ready or not, the era when AI produces first-of-its-kind knowledge is approaching. The question for strategists isn’t if but how your organization will absorb, vet and leverage those insights—before your competitors do.
