Artificial General Intelligence (AGI) is one of the most discussed and polarizing frontiers in the technology world. Unlike narrow AI, which excels in specific domains, AGI is expected to demonstrate human-level or superhuman intelligence across a wide range of tasks. But key questions remain: When will AGI arrive? Will it arrive at all? And if it does, what will it mean for humanity?

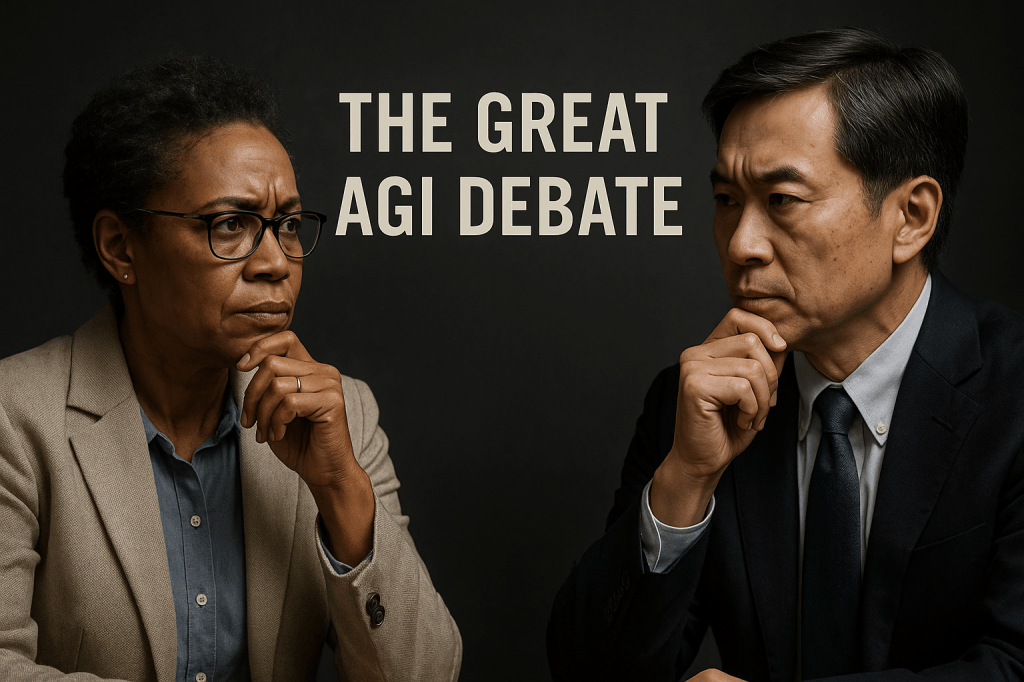

To explore these questions, we bring together two distinguished voices in AI:

- Dr. Evelyn Carter — Computer Scientist, AGI optimist, and advisor to multiple frontier AI labs.

- Dr. Marcus Liang — Philosopher of Technology, AI skeptic, and researcher on alignment, ethics, and systemic risks.

What follows is their debate — a rigorous, professional dialogue about the path toward AGI, the hurdles that remain, and the potential futures that could unfold.

Opening Positions

Dr. Carter (Optimist):

AGI is not a distant dream; it’s an approaching reality. The pace of progress in scaling large models, combining them with reasoning frameworks, and embedding them into multi-agent systems is exponential. Within the next decade, possibly as soon as the early 2030s, we will see systems that can perform at or above human levels across most intellectual domains. The signals are here: agentic AI, retrieval-augmented reasoning, robotics integration, and self-improving architectures.

Dr. Liang (Skeptic):

While I admire the ambition, I believe AGI is much further off, if it ever comes. Intelligence isn’t just a matter of scaling more parameters or adding memory modules; it’s an emergent property of embodied, socially embedded beings. We’re still struggling with hallucinations, brittle reasoning, and value alignment in today’s large models. Without breakthroughs in cognition, interpretability, and real-world grounding, talk of AGI within a decade is premature. The possibility exists, but the timeline is longer: perhaps multiple decades, if at all.

When Will AGI Arrive?

Dr. Carter:

Look at the trends: transformers arrived in 2017, by 2020 models were surpassing human baselines on many natural language benchmarks, and by 2025 frontier labs are producing models that rival experts in law, medicine, and strategy games. Progress is compressing timelines. The “emergence curve” suggests that new capabilities appear unpredictably once systems pass a critical scale. If AI hardware keeps improving at a Moore’s Law-like pace, whether through today’s accelerators or emerging approaches such as neuromorphic chips and photonic computing, the computational threshold for AGI could be reached soon.

Dr. Liang:

Extrapolation is dangerous. Yes, benchmarks fall quickly, but benchmarks are not reality. The leap from narrow competence to generalized understanding is vast. We don’t yet know what cognitive architecture underpins generality. Biological brains integrate perception, motor skills, memory, abstraction, and emotions seamlessly — something no current model approaches. Predicting AGI by scaling current methods risks mistaking “more of the same” for “qualitatively new.” My forecast: not before 2050, if ever.

How Will AGI Emerge?

Dr. Carter:

Through integration, not isolation. AGI won’t be one giant model; it will be an ecosystem. Large reasoning engines combined with specialized expert systems, embodied in robots, augmented by sensors, and orchestrated by agentic frameworks. The result will look less like a single “brain” and more like a network of capabilities that together achieve general intelligence. Already we see early versions of this in autonomous AI agents that can plan, execute, and reflect.

Dr. Liang:

That integration is precisely what makes it fragile. Stitching narrow intelligences together doesn’t equal generality — it creates complexity, and complexity brings brittleness. Moreover, real AGI will need grounding: an understanding of the physical world through interaction, not just prediction of tokens. That means robotics, embodied cognition, and a leap in common-sense reasoning. Until AI can reliably reason about a kitchen, a factory floor, or a social situation without contradiction, we’re still far away.

Why Will AGI Be Pursued Relentlessly?

Dr. Carter:

The incentives are overwhelming. Nations see AGI as strategic leverage, a technology comparable in impact to nuclear technology or the internet. Corporations see trillions of dollars in value across automation, drug discovery, defense, finance, and creative industries. Human curiosity alone would drive it forward, even without profit motives. The trajectory is irreversible; too many actors are racing for the same prize.

Dr. Liang:

I agree it will be pursued — but pursuit doesn’t guarantee delivery. Fusion energy has been pursued for 70 years. A breakthrough might be elusive or even impossible. Human-level intelligence might be tied to evolutionary quirks we can’t replicate in silicon. Without breakthroughs in alignment and interpretability, governments may even slow progress, fearing uncontrolled systems. So relentless pursuit could just as easily lead to regulatory walls, moratoriums, or even technological stagnation.

What If AGI Never Arrives?

Dr. Carter:

If AGI never arrives, humanity will still benefit enormously from “AI++” — systems that, while not fully general, dramatically expand human capability in every domain. Think of advanced copilots in science, medicine, and governance. The absence of AGI doesn’t equal stagnation; it simply means augmentation, not replacement, of human intelligence.

Dr. Liang:

And perhaps that’s the more sustainable outcome. A world of near-AGI systems might avoid existential risk while still transforming productivity. But if AGI is impossible under current paradigms, we’ll need to rethink research from first principles: exploring neuromorphic computing, hybrid symbolic-neural models, or even quantum cognition. The field might fracture — some chasing AGI, others perfecting narrow AI that enriches society.

Obstacles on the Path

Both experts agree on the main hurdles:

- Alignment: Ensuring that AI systems’ goals align with human values.

- Interpretability: Understanding what the model “knows.”

- Robustness: Reducing brittleness in real-world environments.

- Computation & Energy: Overcoming resource bottlenecks.

- Governance: Navigating geopolitical competition and regulation.

Dr. Carter frames these as solvable engineering challenges; Dr. Liang frames them as fundamental, possibly insurmountable roadblocks.

Closing Statements

Dr. Carter:

AGI is within reach — not inevitable, but highly probable. Expect it in the next decade or two. Prepare for disruption, opportunity, and the redefinition of work, governance, and even identity.

Dr. Liang:

AGI may be possible, but expecting it soon is wishful thinking. Until we crack the mysteries of cognition and grounding, it remains speculative. The wise path is to build responsibly, prioritize alignment, and avoid over-promising. The future might be transformed by AI, but perhaps not in the way “AGI” narratives assume.

Takeaways to Consider

- Timelines diverge widely: Optimists say 2030s, skeptics say post-2050 (if at all).

- Pathways differ: One predicts integrated multi-agent systems, the other insists on embodied, grounded cognition.

- Obstacles are real: Alignment, interpretability, and robustness remain unsolved.

- Even without AGI: Near-AGI systems will still reshape industries and society.

👉 The debate is not about whether AGI matters; it’s about when, and whether, it is possible. For readers, the best preparation lies in learning, adapting, and engaging with these questions now, before the answers arrive in practice rather than in theory.

We also discuss this topic on Spotify.