1. Why “AI Reasoning” Is Suddenly The Hot Topic

The 2025 Stanford AI Index calls out complex reasoning as the last stubborn bottleneck even as models master coding, vision and natural language tasks, and reminds us that benchmark gains flatten as soon as true logical generalization is required. (hai.stanford.edu)

At the same time, frontier labs now market specialized reasoning models (OpenAI o-series, Gemini 2.5, Claude Opus 4), each claiming new state-of-the-art scores on math, science and multi-step planning tasks. (blog.google, openai.com, anthropic.com)

2. So, What Exactly Is AI Reasoning?

At its core, AI reasoning is the capacity of a model to form intermediate representations that support deduction, induction and abduction, not merely next-token prediction. DeepMind’s Gemini blog phrases it as the ability to “analyze information, draw logical conclusions, incorporate context and nuance, and make informed decisions.” (blog.google)

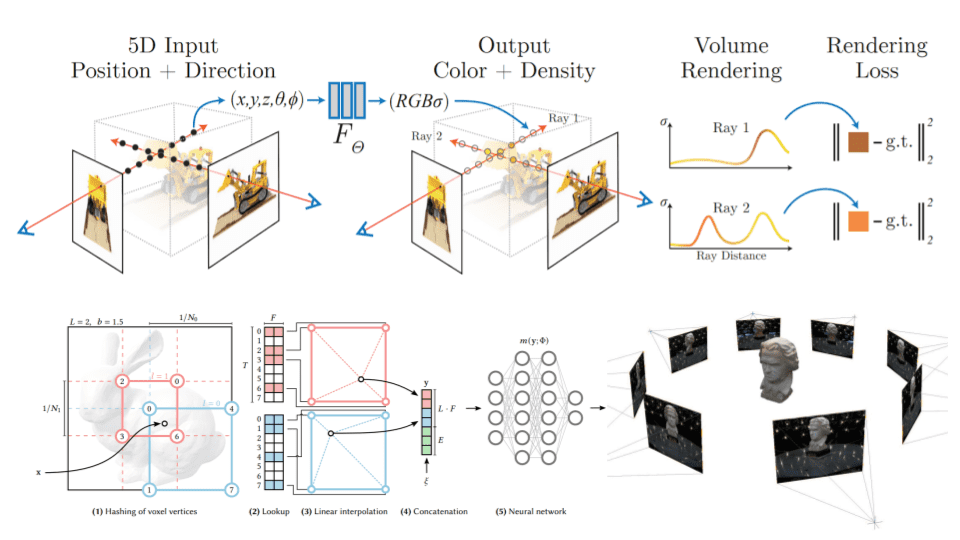

Early LLMs approximated reasoning through Chain-of-Thought (CoT) prompting, but CoT leans on incidental pattern-matching and breaks when steps must be verified. Recent literature contrasts these prompt tricks with explicitly architected reasoning systems that self-correct, search, vote or call external tools. (medium.com)
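
To make the "vote" idea concrete, here is a minimal sketch of majority voting over several sampled answers. It assumes a hypothetical `sample_answer` callable standing in for one model call; `scripted_sampler` is an illustrative stub, not a real API.

```python
from collections import Counter
from typing import Callable, Iterable, List, Tuple


def majority_vote(sample_answer: Callable[[], str], n_samples: int = 5) -> Tuple[str, float]:
    """Draw n_samples answers and return the most common one with its vote share."""
    answers: List[str] = [sample_answer() for _ in range(n_samples)]
    winner, count = Counter(answers).most_common(1)[0]
    return winner, count / n_samples


def scripted_sampler(answers: Iterable[str]) -> Callable[[], str]:
    """Hypothetical stand-in for a model: replays a fixed list of answers."""
    it = iter(answers)
    return lambda: next(it)


if __name__ == "__main__":
    sampler = scripted_sampler(["42", "41", "42", "42", "17"])
    print(majority_vote(sampler, n_samples=5))
```

The vote share doubles as a rough confidence signal: a narrow win suggests the samples disagree and the answer deserves a second check.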

Concrete Snapshots of AI Reasoning in Action (2022 – 2025)

Below are seven recent systems, methods and benchmarks that make the abstract idea of “AI reasoning” tangible. Each one embodies a different flavor of reasoning: deduction, planning, tool use, neuro-symbolic fusion, or strategic social inference.

| # | System / Paper | Core Reasoning Modality | Why It Matters Now |
|---|---|---|---|
| 1 | AlphaGeometry (DeepMind, Jan 2024) | Deductive, neuro-symbolic: a language model proposes candidate geometric constructs; a symbolic prover rigorously fills in the proof steps. | Solved 25 of 30 International Mathematical Olympiad geometry problems within the contest time limit, approaching the average human gold medalist and showing how LLM “intuition” plus logic engines can yield verifiable proofs. (deepmind.google) |
| 2 | Gemini 2.5 Pro (“thinking” model, Mar 2025) | Process-based self-reflection: the model produces long internal traces before answering. | Without expensive majority-vote tricks, it tops benchmarks such as GPQA (graduate-level science) and AIME 2025 (competition math), illustrating that deliberate internal rollouts, not just more parameters, boost reasoning depth. (blog.google) |
| 3 | ARC-AGI-2 Benchmark (Mar 2025) | General fluid intelligence test: puzzles easy for humans, still hard for AIs. | Pure LLMs score 0 – 4 %; even OpenAI’s o-series with search scores under 15 % at high compute. The gap clarifies what isn’t solved and anchors research on genuinely novel reasoning techniques. (arcprize.org) |
| 4 | Tree-of-Thought (ToT) Prompting (2023, NeurIPS) | Search over reasoning paths: explores multiple partial “thoughts,” backtracks, and self-evaluates. | Raised GPT-4’s success on the Game-of-24 puzzle from 4 % to 74 %, showing that structured exploration outperforms linear Chain-of-Thought when intermediate decisions interact. (arxiv.org) |
| 5 | ReAct Framework (ICLR 2023) | Reason + Act loops: interleaves natural-language reasoning with external API calls. | On HotpotQA and FEVER, ReAct cuts hallucinations by actively fetching evidence; on ALFWorld/WebShop it beats RL agents by +34 % / +10 % success, showing how tool-augmented reasoning becomes practical software engineering. (arxiv.org) |
| 6 | Cicero (Meta FAIR, Science 2022) | Social & strategic reasoning: blends a dialogue LM with a look-ahead planner that models other agents’ beliefs. | Achieved a top-10 % ranking across 40 online Diplomacy games by planning alliances, negotiating in natural language, and updating its strategy when partners betrayed deals: reasoning that extends beyond pure logic into theory-of-mind. (noambrown.github.io) |
| 7 | PaLM-SayCan (Google Robotics, updated Aug 2022) | Grounded causal reasoning: an LLM decomposes a high-level instruction while a value function checks which sub-skills are feasible in the robot’s current state. | With the upgraded PaLM backbone it executes 74 % of 101 real-world kitchen tasks (up 13 pp), demonstrating that reasoning must mesh with physical affordances, not just text. (say-can.github.io) |
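
The "search over reasoning paths" idea in row 4 can be illustrated on the Game-of-24 puzzle itself. The sketch below is an assumption-laden analogue, not the Tree-of-Thought method: it uses no language model, and the "thought generator" is plain exhaustive enumeration with depth-first backtracking. The function names `propose`, `solve` and `game_of_24` are illustrative.

```python
from fractions import Fraction
from itertools import combinations
from typing import Iterator, List, Optional, Tuple

# A state is the list of numbers still available, each with the expression that built it.
State = List[Tuple[Fraction, str]]


def propose(state: State) -> Iterator[State]:
    """Yield every successor state obtained by combining two numbers with one operator."""
    for i, j in combinations(range(len(state)), 2):
        (a, ea), (b, eb) = state[i], state[j]
        rest = [state[k] for k in range(len(state)) if k not in (i, j)]
        candidates = [
            (a + b, f"({ea} + {eb})"),
            (a * b, f"({ea} * {eb})"),
            (a - b, f"({ea} - {eb})"),
            (b - a, f"({eb} - {ea})"),
        ]
        if b != 0:
            candidates.append((a / b, f"({ea} / {eb})"))
        if a != 0:
            candidates.append((b / a, f"({eb} / {ea})"))
        for cand in candidates:
            yield rest + [cand]


def solve(state: State, target: Fraction = Fraction(24)) -> Optional[str]:
    """Depth-first search; returns an expression hitting the target, or None."""
    if len(state) == 1:
        value, expr = state[0]
        return expr if value == target else None
    for successor in propose(state):
        found = solve(successor, target)
        if found is not None:
            return found
    return None  # dead end: the caller backtracks to its next candidate


def game_of_24(numbers: List[int]) -> Optional[str]:
    return solve([(Fraction(n), str(n)) for n in numbers])


if __name__ == "__main__":
    print(game_of_24([4, 9, 10, 13]))
    print(game_of_24([1, 1, 1, 1]))
```

In the prompting method, a model proposes and scores only a handful of candidates per step, so the search stays small; the exhaustive version here trades that pruning for exactness.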

Key Take-aways

- Reasoning is multi-modal. Deduction (AlphaGeometry), deliberative search (ToT), embodied planning (PaLM-SayCan) and strategic social inference (Cicero) are all legitimate forms of reasoning. Treating “reasoning” as a single scalar misses these nuances.

- Architecture beats scale, sometimes. Gemini 2.5’s improvements come from a process model training recipe; ToT succeeds by changing inference strategy; AlphaGeometry succeeds via neuro-symbolic fusion. Each shows that clever structure can trump brute-force parameter growth.

- Benchmarks like ARC-AGI-2 keep us honest. They remind the field that next-token prediction tricks plateau on tasks that require abstract causal concepts or out-of-distribution generalization.

- Tool use is the bridge to the real world. ReAct and PaLM-SayCan illustrate that reasoning models must call calculators, databases, or actuators, and verify outputs, to be robust in production settings.

- Human factors matter. Cicero’s success (and occasional deception) underscores that advanced reasoning agents must incorporate explicit models of beliefs, trust and incentives, a fertile ground for ethics and governance research.
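
The point about meshing reasoning with physical affordances can be sketched as a scoring rule. Assuming a SayCan-style setup in which a language model rates how useful each skill is for the instruction and a value function rates how feasible it is right now, the next skill is the one with the highest product. All skill names and numbers below are made up for illustration.

```python
from typing import Dict


def select_skill(llm_scores: Dict[str, float], affordances: Dict[str, float]) -> str:
    """Pick the skill maximizing (usefulness per the LLM) x (feasibility in the current state)."""
    combined = {
        skill: llm_scores[skill] * affordances.get(skill, 0.0)
        for skill in llm_scores
    }
    return max(combined, key=combined.get)


if __name__ == "__main__":
    # Hypothetical scores for the instruction "clean up the spill".
    llm_scores = {"pick up sponge": 0.6, "go to counter": 0.3, "open drawer": 0.1}
    # Hypothetical feasibility: the sponge is out of reach until the robot moves.
    affordances = {"pick up sponge": 0.1, "go to counter": 0.9, "open drawer": 0.2}
    print(select_skill(llm_scores, affordances))
```

Here the language model alone would pick up the sponge first, but the low feasibility score pushes the plan toward moving to the counter, which is the behavior the grounding step is meant to produce.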

3. Why It Works Now

- Process- or “Thinking” Models. OpenAI o3, Gemini 2.5 Pro and similar models train a dedicated process network that generates long internal traces before emitting an answer, effectively giving the network “time to think.” (blog.google, openai.com)

- Massive, Cheaper Compute. Inference cost for GPT-3.5-level performance has fallen ~280× since 2022, letting practitioners afford multi-sample reasoning strategies such as majority-vote or tree-search. (hai.stanford.edu)

- Tool Use & APIs. Modern APIs expose structured tool-calling, background mode and long-running jobs; OpenAI’s GPT-4.1 guide shows a 20 % SWE-bench gain just by integrating tool-use reminders. (cookbook.openai.com)

- Hybrid (Neuro-Symbolic) Methods. Fresh neurosymbolic pipelines fuse neural perception with SMT solvers, scene-graphs or program synthesis to attack out-of-distribution logic puzzles. (See recent survey papers and the surge of ARC-AGI solvers.) (arcprize.org)
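
As a rough illustration of the tool-use loop behind these points, the sketch below alternates thought, action and observation in the ReAct style. It makes no real API calls: `scripted_policy` is a hypothetical stand-in for the model, and the tools and population figures are invented for the example.

```python
from typing import Callable, Dict, List, Optional, Tuple

Tool = Callable[[str], str]
Policy = Callable[[List[str]], Tuple[str, str, str]]


def lookup_population(city: str) -> str:
    # Toy "database"; the figures are made up.
    table = {"Springfield": "120000", "Shelbyville": "80000"}
    return table.get(city, "unknown")


def add(args: str) -> str:
    a, b = (int(x) for x in args.split(","))
    return str(a + b)


TOOLS: Dict[str, Tool] = {"lookup_population": lookup_population, "add": add}


def scripted_policy(observations: List[str]) -> Tuple[str, str, str]:
    """Stand-in for the model: returns (thought, action, argument) given past observations."""
    if len(observations) == 0:
        return ("I need the first population.", "lookup_population", "Springfield")
    if len(observations) == 1:
        return ("Now the second one.", "lookup_population", "Shelbyville")
    if len(observations) == 2:
        return ("Add the two figures.", "add", f"{observations[0]},{observations[1]}")
    return ("I have the total.", "finish", observations[-1])


def react_loop(policy: Policy, tools: Dict[str, Tool], max_steps: int = 8) -> Tuple[Optional[str], List[str]]:
    """Run thought/action/observation steps until the policy finishes or the budget runs out."""
    observations: List[str] = []
    trace: List[str] = []
    for _ in range(max_steps):
        thought, action, arg = policy(observations)
        trace.append(f"Thought: {thought}")
        trace.append(f"Action: {action}[{arg}]")
        if action == "finish":
            return arg, trace
        # Unknown tools produce an error observation instead of crashing the loop.
        observation = tools[action](arg) if action in tools else f"error: unknown tool {action}"
        trace.append(f"Observation: {observation}")
        observations.append(observation)
    return None, trace


if __name__ == "__main__":
    answer, trace = react_loop(scripted_policy, TOOLS)
    print("\n".join(trace))
    print("Answer:", answer)
```

The `max_steps` budget and the returned trace are the two pieces worth keeping in any real version: one caps cost, the other gives an audit trail.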

4. Where the Bar Sits Today

| Capability | Frontier Performance (mid-2025) | Caveats |
|---|---|---|
| ARC-AGI-1 (general puzzles) | ~76 % with OpenAI o3-low at very high test-time compute | Pareto trade-off between accuracy and cost (arcprize.org) |
| ARC-AGI-2 | < 9 % across all labs | Still “unsolved”; new ideas needed (arcprize.org) |
| GPQA (grad-level science Q&A) | Gemini 2.5 Pro #1 without voting | Requires million-token context windows (blog.google) |
| SWE-bench Verified (code repair) | 63 % with Gemini 2.5 agent; 55 % with GPT-4.1 agentic harness | Needs bespoke scaffolds and rigorous evals (blog.google, cookbook.openai.com) |

Limitations to watch

- Cost & Latency. Step-sampling, self-reflection and consensus raise latency by up to 20× and inflate costs, a point even Business Insider flags when cheaper DeepSeek releases can’t grab headlines. (businessinsider.com)

- Brittleness Off-Distribution. ARC-AGI-2’s single-digit scores illustrate how models still over-fit to benchmark styles. (arcprize.org)

- Explainability & Safety. Longer chains can amplify hallucinations if no verifier model checks each step; agents that call external tools need robust sandboxing and audit trails.
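
Step-level checking can be sketched for the simplest case, arithmetic chains. The example assumes each reasoning step is written as `a op b = c`; real verifier models handle free-form text, so this is only a toy illustration, and the name `first_bad_step` is hypothetical.

```python
import re
from fractions import Fraction
from typing import List, Optional

NUM = r"-?\d+(?:\.\d+)?"
STEP = re.compile(rf"^\s*({NUM})\s*([+\-*/])\s*({NUM})\s*=\s*({NUM})\s*$")

OPS = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
    "/": lambda a, b: a / b,
}


def first_bad_step(steps: List[str]) -> Optional[int]:
    """Return the index of the first step that fails to parse or to check, else None."""
    for i, step in enumerate(steps):
        match = STEP.match(step)
        if match is None:
            return i  # unparseable steps are treated as unverified
        a, op, b, c = match.groups()
        left, right, claimed = Fraction(a), Fraction(b), Fraction(c)
        if op == "/" and right == 0:
            return i
        if OPS[op](left, right) != claimed:
            return i
    return None


if __name__ == "__main__":
    print(first_bad_step(["12 * 3 = 36", "36 + 6 = 42"]))
    print(first_bad_step(["12 * 3 = 36", "36 + 6 = 43", "43 - 1 = 42"]))
```

The second chain ends on the right number but is flagged at its second step, which is the case an answer-only check would miss.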

5. Practical Take-Aways for Aspiring Professionals

| Pillar | What to Master | Why It Matters |
|---|---|---|
| Prompt & Agent Design | CoT, ReAct, Tree-of-Thought, tool schemas, background execution modes | Unlock double-digit accuracy gains on reasoning tasks (cookbook.openai.com) |
| Neuro-Symbolic Tooling | LangChain Expression Language, LlamaIndex routers, program-synthesis libraries, SAT/SMT interfaces | Combine neural intuition with symbolic guarantees for safety-critical workflows |
| Evaluation Discipline | Benchmarks (ARC-AGI, PlanBench, SWE-bench), custom unit tests, cost-vs-accuracy curves | Reasoning quality is multidimensional; naked accuracy is marketing, not science (arcprize.org) |
| Systems & MLOps | Distributed tracing, vector-store caching, GPU/TPU economics, streaming APIs | Reasoning models are compute-hungry; efficiency is a feature (hai.stanford.edu) |
| Governance & Ethics | Alignment taxonomies, red-team playbooks, policy awareness (e.g., SB-1047 debates) | Long-running autonomous agents raise fresh safety and compliance questions |
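
For the cost-vs-accuracy curves mentioned under Evaluation Discipline, one simple approach is to keep only the configurations on the Pareto frontier. The sketch below assumes each evaluation run is summarized by a cost and an accuracy; the run names and figures are hypothetical.

```python
from typing import List, NamedTuple


class Run(NamedTuple):
    name: str
    cost_usd: float   # cost per task
    accuracy: float   # fraction of tasks solved


def pareto_frontier(runs: List[Run]) -> List[Run]:
    """Keep runs that no cheaper-or-equal run matches or beats on accuracy."""
    frontier: List[Run] = []
    best_accuracy = float("-inf")
    # Sort by ascending cost; at equal cost, consider the most accurate run first.
    for run in sorted(runs, key=lambda r: (r.cost_usd, -r.accuracy)):
        if run.accuracy > best_accuracy:
            frontier.append(run)
            best_accuracy = run.accuracy
    return frontier


if __name__ == "__main__":
    runs = [
        Run("single-shot", 0.01, 0.52),
        Run("vote-5", 0.05, 0.61),
        Run("vote-20", 0.20, 0.60),   # costs more than vote-5 yet scores lower
        Run("tree-search", 0.40, 0.74),
    ]
    for run in pareto_frontier(runs):
        print(run)
```

Plotting only the frontier makes the trade-off explicit: every remaining point buys extra accuracy with extra spend, and anything dropped was strictly a worse deal.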

6. The Road Ahead—Deepening the Why, Where, and ROI of AI Reasoning

1 | Why Enterprises Cannot Afford to Ignore Reasoning Systems

- From task automation to orchestration. McKinsey’s 2025 workplace report tracks a sharp pivot from “autocomplete” chatbots to autonomous agents that can chat with a customer, verify fraud, arrange shipment and close the ticket in a single run. The differentiator is multi-step reasoning, not bigger language models. (mckinsey.com)

- Reliability, compliance, and trust. Hallucinations that were tolerable in marketing copy are unacceptable when models summarize contracts or prescribe process controls. Deliberate reasoning, often coupled with verifier loops, cuts error rates on complex extraction tasks by > 90 %, according to Google’s Gemini 2.5 enterprise pilots. (cloud.google.com)

- Economic leverage. Vertex AI customers report that Gemini 2.5 Flash executes “think-and-check” traces 25 % faster and up to 85 % cheaper than earlier models, making high-quality reasoning economically viable at scale. (cloud.google.com)

- Strategic defensibility. Benchmarks such as ARC-AGI-2 expose capability gaps that pure scale will not close; organizations that master hybrid (neuro-symbolic, tool-augmented) approaches build moats that are harder to copy than fine-tuning another LLM. (arcprize.org)

2 | Where AI Reasoning Is Already Flourishing

| Ecosystem | Evidence of Momentum | What to Watch Next |
|---|---|---|
| Retail & Supply Chain | Target, Walmart and Home Depot now run AI-driven inventory ledgers that issue billions of demand-supply predictions weekly, slashing out-of-stocks. (businessinsider.com) | Autonomous reorder loops with real-time macro-trend ingestion (EY & Pluto7 pilots). (ey.com, pluto7.com) |
| Software Engineering | Developer-facing agents boost productivity ~30 % by generating functional code, mapping legacy business logic and handling ops tickets. (timesofindia.indiatimes.com) | “Inner-loop” reasoning: agents that propose and formally verify patches before opening pull requests. |
| Legal & Compliance | Reasoning models now hit 90 %+ clause-interpretation accuracy and auto-triage mass-tort claims with traceable justifications, shrinking review time by weeks. (cloud.google.com, patterndata.ai, edrm.net) | Court systems are drafting usage rules after high-profile hallucination cases; firms that can prove veracity will win market share. (theguardian.com) |
| Advanced Analytics on Cloud Platforms | Gemini 2.5 Pro on Vertex AI, OpenAI o-series agents on Azure, and open-source ARC Prize entrants provide managed “reasoning as a service,” accelerating adoption beyond Big Tech. (blog.google, cloud.google.com, arcprize.org) | Industry-specific agent bundles (finance, life-sciences, energy) tuned for regulatory context. |

3 | Where the Biggest Business Upside Lies

- Decision-centric Processes. Supply-chain replanning, revenue-cycle management, portfolio optimization. These tasks need models that can weigh trade-offs, run counter-factuals and output an action plan, not a paragraph. Early adopters report 3–7 pp margin gains in pilot P&Ls. (businessinsider.com, pluto7.com)

- Knowledge-intensive Service Lines. Legal, audit, insurance claims, medical coding. Reasoning agents that cite sources, track uncertainty and pass structured “sanity checks” unlock 40–60 % cost take-outs while improving auditability, as long as governance guard-rails are in place. (cloud.google.com, patterndata.ai)

- Developer Productivity Platforms. Internal dev-assist, code migration, threat modelling. Firms embedding agentic reasoning into CI/CD pipelines report 20–30 % faster release cycles and reduced security regressions. (timesofindia.indiatimes.com)

- Autonomous Planning in Operations. Factory scheduling, logistics routing, field-service dispatch. EY forecasts a shift from static optimization to agents that adapt plans as sensor data changes, citing pilot ROIs of 5× in throughput-sensitive industries. (ey.com)

4 | Execution Priorities for Leaders

| Priority | Action Items for 2025–26 |
|---|---|
| Set a Reasoning Maturity Target | Choose benchmarks (e.g., ARC-AGI-style puzzles for R&D, SWE-bench forks for engineering, synthetic contract suites for legal) and quantify accuracy-vs-cost goals. |
| Build Hybrid Architectures | Combine process-models (Gemini 2.5 Pro, OpenAI o-series) with symbolic verifiers, retrieval-augmented search and domain APIs; treat orchestration and evaluation as first-class code. |
| Operationalise Governance | Implement chain-of-thought logging, step-level verification, and “refusal triggers” for safety-critical contexts; align with emerging policy (e.g., EU AI Act, SB-1047). |
| Upskill Cross-Functional Talent | Pair reasoning-savvy ML engineers with domain SMEs; invest in prompt/agent design, cost engineering, and ethics training. PwC finds that 49 % of tech leaders already link AI goals to core strategy; laggards risk irrelevance. (pwc.com) |

Bottom Line for Practitioners

Expect the near term to revolve around process-model–plus-tool hybrids, richer context windows and automatic verifier loops. Yet ARC-AGI-2’s stubborn difficulty reminds us that statistical scaling alone will not buy true generalization: novel algorithmic ideas — perhaps tighter neuro-symbolic fusion or program search — are still required.

For you, that means interdisciplinary fluency: comfort with deep-learning engineering and classical algorithms, plus a habit of rigorous evaluation and ethical foresight. Nail those, and you’ll be well-positioned to build, audit or teach the next generation of reasoning systems.

AI reasoning is transitioning from a research aspiration to the engine room of competitive advantage. Enterprises that treat reasoning quality as a product metric, not a lab curiosity—and that embed verifiable, cost-efficient agentic workflows into their core processes—will capture out-sized economic returns while raising the bar on trust and compliance. The window to build that capability before it becomes table stakes is narrowing; the playbook above is your blueprint to move first and scale fast.
