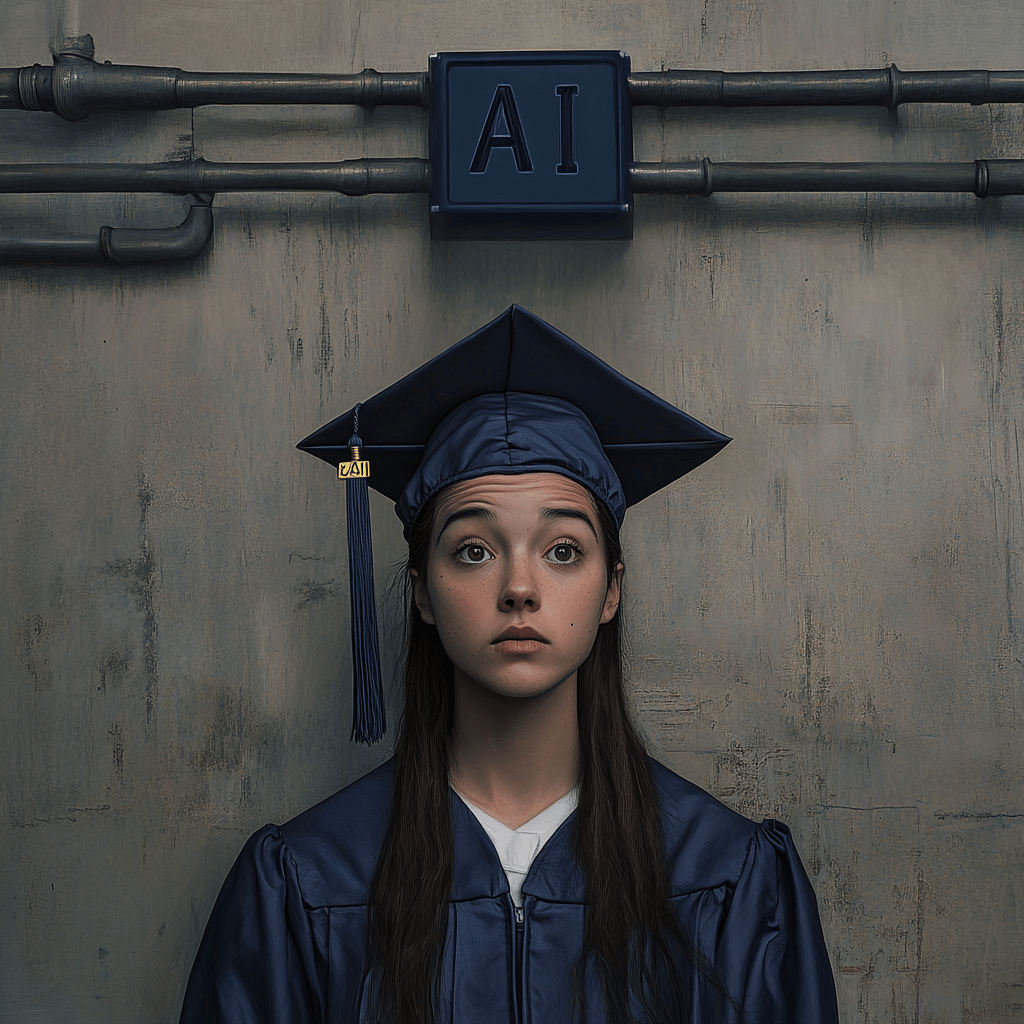

A field guide for the classes of 2025-2028

1. The Inflection Point

Artificial intelligence is no longer a distant R&D story; it is the dominant macro-force reshaping work in real time. In the World Economic Forum's Future of Jobs 2025 survey, 40% of global employers say they will shrink headcount where AI can automate tasks, even as the same technologies are expected to create 11 million new roles and displace 9 million others this decade (weforum.org). In short, the pie is being sliced differently, not merely made smaller.

McKinsey’s 2023 update adds a sharper edge: with generative AI acceleration, up to 30% of the hours worked in the U.S. could be automated by 2030, pulling hardest on routine office support, customer service and food-service activities (mckinsey.com). Meanwhile, the OECD finds that disruption is no longer limited to factory floors: tertiary-educated “white-collar” workers are now squarely in the blast radius (oecd.org).

For the next wave of graduates, the message is simple: AI will not eliminate everyone’s job, but it will re-write every job description.

2. Roles on the Front Line of Automation Risk (2025-2028)

Why These Roles Sit in the Automation Crosshairs

The occupations listed in this section share four traits that make them especially vulnerable between now and 2028:

- Digital‐only inputs and outputs – The work starts and ends in software, giving AI full visibility into the task without sensors or robotics.

- High pattern density – Success depends on spotting or reproducing recurring structures (form letters, call scripts, boilerplate code), which large language and vision models already handle with near-human accuracy.

- Low escalation threshold – When exceptions arise, they can be routed to a human supervisor; the default flow can be automated safely.

- Strong cost-to-value pressure – These are often entry-level or high-turnover positions where labor costs dominate margins, so even modest automation gains translate into rapid ROI.

| Exposure Area | Why the Risk Is High | Typical Early-Career Titles |
|---|---|---|
| Routine information processing | Large language models can draft, summarize and QA faster than junior staff | Data entry clerk, accounts-payable assistant, paralegal researcher |
| Transactional customer interaction | Generative chatbots now resolve Tier-1 queries at less than one-third the cost of a human agent | Call-center rep, basic tech-support agent, retail bank teller |
| Template-driven content creation | AI copy- and image-generation tools produce MVP marketing assets instantly | Junior copywriter, social-media coordinator, background graphic designer |
| Repetitive programming “glue code” | Code assistants cut keystrokes by more than 50%, commoditizing entry-level dev work | Web front-end developer, QA script writer |

Key takeaway: AI is not eliminating entire professions overnight—it is hollowing out the routine core of jobs first. Careers anchored in predictable, rules-based tasks will see hiring freezes or shrinking ladders, while roles that layer judgment, domain context, and cross-functional collaboration on top of automation will remain resilient—and even become more valuable as they supervise the new machine workforce.

Real-World Disruption Snapshots

| Domain | What Happened | Why It Matters to New Grads |
|---|---|---|
| Advertising & Marketing | WPP’s £300 million AI pivot. • WPP, the world’s largest agency holding company, now spends ~£300 million a year on data-science and generative-content pipelines (“WPP Open”) and has begun streamlining creative headcount. • CEO Mark Read, who called AI “fundamental” to WPP’s future, announced his departure amid the shake-up, while Meta plans to let brands create whole campaigns without agencies (“you don’t need any creative… just read the results”). | Entry-level copywriters, layout artists and media-buy coordinators, the classic “first rung” jobs, are being automated. Graduates eyeing brand work now need prompt-design skills, data-driven A/B testing know-how, and fluency with toolchains like Midjourney V6, Adobe Firefly, and Meta’s Advantage+ suite. (theguardian.com) |
| Computer Science / Software Engineering | The end of the junior-dev safety net. • CIO Magazine reports organizations “will hire fewer junior developers and interns” as GitHub Copilot-style assistants write boilerplate, tests and even small features; teams are being rebuilt around a handful of senior engineers who review AI output. • GitHub’s enterprise study shows developers finish tasks 55% faster and report 90% higher job satisfaction with Copilot, enough of a productivity lift that some firms freeze junior hiring to recoup license fees. • WIRED highlights that a full-featured coding agent now costs ≈ $120 per year, orders of magnitude cheaper than a new-grad salary, incentivizing companies to skip “apprentice” roles altogether. | The traditional “learn on the job” progression (QA → junior dev → mid-level) is collapsing. Graduates must arrive with: 1. Tool fluency in code copilots (Copilot, CodeWhisperer, Gemini Code) and the judgment to critique AI output. 2. Domain depth (algorithms, security, infra) that AI cannot solve autonomously. 3. System-design & code-review chops, the skills that keep humans “on the loop” rather than “in the loop.” (cio.com, linearb.io, wired.com) |

Take-away for the Class of ’25-’28

- Advertising track? Pair creative instincts with data-science electives, learn multimodal prompt craft, and treat AI A/B testing as a core analytics discipline.

- Software-engineering track? Lead with architectural thinking, security, and code-quality analysis—the tasks AI still struggles with—and show an AI-augmented portfolio that proves you supervise, not just consume, generative code.

By anchoring your early career to the human-oversight layer rather than the routine-production layer, you insulate yourself from the first wave of displacement while signaling to employers that you’re already operating at the next productivity frontier.

Entry-level access is the biggest casualty: the World Economic Forum warns that these “rite-of-passage” roles are evaporating fastest, narrowing the traditional career ladder (weforum.org).

3. Careers Poised to Thrive

| Momentum | What Shields These Roles | Example Titles & Growth Signals |
|---|---|---|
| Advanced AI & Data Engineering | Talent shortage + exponential demand for model design, safety & infra | Machine-learning engineer, AI risk analyst, LLM prompt architect |
| Cyber-physical & Skilled Trades | Physical dexterity plus systems thinking: hard to automate, and in deficit | Industrial electrician, HVAC technician, biomedical equipment tech (+18% growth; businessinsider.com) |
| Healthcare & Human Services | Ageing populations + empathy-heavy tasks | Nurse practitioner, physical therapist, mental-health counsellor |
| Cybersecurity | Attack surfaces grow with every API; human judgment stays critical | Security operations analyst, cloud-security architect |
| Green & Infrastructure Projects | Policy tailwinds (IRA, CHIPS) drive field demand | Grid-modernization engineer, construction site superintendent |
| Product & Experience Strategy | Firms need “translation layers” between AI engines and customer value | AI-powered CX consultant, digital product manager |

A notable cultural shift underscores the story: 55% of U.S. office workers now consider jumping to skilled trades for greater stability and meaning, a trend most pronounced among Gen Z (timesofindia.indiatimes.com).

4. The Minimum Viable Skill-Stack for Any Degree

LinkedIn’s 2025 data shows “AI Literacy” is the fastest-growing skill across every function and predicts that 70% of the skills in a typical job will change by 2030 (linkedin.com). Graduates who combine core domain knowledge with the following transversal capabilities will stay ahead of the churn:

- Prompt Engineering & Tool Fluency

  - Hands-on familiarity with at least one generative AI platform (e.g., ChatGPT, Claude, Gemini).

  - Ability to chain prompts, critique outputs and validate sources.

- Data Literacy & Analytics

  - Competence in SQL or Python for quick analysis; interpreting dashboards; understanding data ethics.

- Systems Thinking

  - Mapping processes end-to-end, spotting automation leverage points, and estimating ROI.

- Human-Centric Skills

  - Conflict mitigation, storytelling, stakeholder management and ethical reasoning: four of the top ten “on-the-rise” skills per LinkedIn (linkedin.com).

- Cloud & API Foundations

  - Basic grasp of how micro-services, RESTful APIs and event streams knit modern stacks together.

- Learning Agility

  - Comfort with micro-credentials, bootcamps and self-directed learning loops; assume a new toolchain every 18 months.
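
As a taste of the "Python for quick analysis" and "estimating ROI" skills listed above, here is a minimal sketch of a payback-period calculation for an automation tool. The function name `payback_months` and every number in the example are hypothetical placeholders, not figures from any of the cited reports; plug in your own estimates.

```python
def payback_months(upfront_cost, monthly_tool_cost,
                   hours_saved_per_month, hourly_labor_cost):
    """Return months until net savings cover the upfront cost, or None if never."""
    # Net monthly benefit: value of labor hours saved minus recurring tool fees.
    monthly_net = hours_saved_per_month * hourly_labor_cost - monthly_tool_cost
    if monthly_net <= 0:
        return None  # The tool never pays for itself under these assumptions.
    return upfront_cost / monthly_net


# Hypothetical inputs, for illustration only.
months = payback_months(
    upfront_cost=6000,         # one-time setup and training
    monthly_tool_cost=200,     # recurring license fee
    hours_saved_per_month=40,  # estimated hours of routine work removed
    hourly_labor_cost=25,      # loaded cost of one hour of staff time
)
print(f"Payback in {months:.1f} months")
```

The point is less the formula than the habit: state your assumptions explicitly, then see how sensitive the answer is when you change them.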

5. Degree & Credential Pathways

| Goal | Traditional Route | Rapid-Reskill Option |
|---|---|---|
| Full-stack AI developer | B.S. Computer Science + M.S. AI | 9-month applied AI bootcamp + TensorFlow cert |
| AI-augmented business analyst | B.B.A. + minor in data science | Coursera “Data Analytics” + Microsoft Fabric nanodegree |
| Healthcare tech specialist | B.S. Biomedical Engineering | 2-year A.A.S. + OEM equipment apprenticeships |
| Green-energy project lead | B.S. Mechanical/Electrical Engineering | NABCEP solar install cert + PMI “Green PM” badge |

6. Action Plan for the Class of ’25–’28

- Audit Your Curriculum

  Map each course to at least one of the six skill pillars above. If gaps exist, fill them with electives or online modules.

- Build an AI-First Portfolio

  Whether marketing, coding or design, publish artifacts that show how you wield AI co-pilots to 10× your deliverables.

- Intern in Automation Hot Zones

  Target firms actively deploying AI; experience with deployment is more valuable than a name-brand logo.

- Network in Two Directions

  - Vertical: mentors already integrating AI in your field.

  - Horizontal: peers in complementary disciplines, your future collaboration partners.

- Secure a “Recession-Proof” Minor

  Examples: cybersecurity, project management, or HVAC technology. It hedges volatility while broadening your lens.

- Co-create With the Machines

  Treat AI as your baseline productivity layer; reserve human cycles for judgment, persuasion and novel synthesis.
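
The curriculum audit in the first step of the action plan can be sketched in a few lines of Python. This is only an illustration: the pillar names come from Section 4, but the function `audit_curriculum` and the sample courses are hypothetical, and deciding which pillar a course really covers is a judgment call you make yourself.

```python
# The six skill pillars listed in Section 4.
PILLARS = [
    "Prompt Engineering & Tool Fluency",
    "Data Literacy & Analytics",
    "Systems Thinking",
    "Human-Centric Skills",
    "Cloud & API Foundations",
    "Learning Agility",
]


def audit_curriculum(course_map):
    """Map courses to pillars and return (coverage, gaps).

    course_map: dict of course name -> list of pillar names it supports.
    """
    coverage = {pillar: [] for pillar in PILLARS}
    for course, pillars in course_map.items():
        for pillar in pillars:
            if pillar not in coverage:
                raise ValueError(f"Unknown pillar: {pillar!r}")
            coverage[pillar].append(course)
    # A gap is any pillar that no course on the list supports.
    gaps = [pillar for pillar in PILLARS if not coverage[pillar]]
    return coverage, gaps


# Hypothetical course list, for illustration only.
my_courses = {
    "Intro to Databases": ["Data Literacy & Analytics"],
    "Business Communication": ["Human-Centric Skills"],
    "Operations Management": ["Systems Thinking"],
}

coverage, gaps = audit_curriculum(my_courses)
for pillar in gaps:
    print(f"Gap: {pillar}")
```

Each pillar printed as a gap is a candidate for an elective or online module.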

7. Careers Likely to Fade

Knowing what analysts are predicting about a role before you commit to that career path should keep surprises to a minimum.

| Sunset Horizon | Rationale |
|---|---|
| Pure data entry & transcription | Near-perfect speech & OCR models remove manual inputs |
| Basic bookkeeping & tax prep | Generative AI-driven accounting SaaS automates compliance workflows |
| Telemarketing & scripted sales | LLM-backed voicebots deliver 24/7 outreach at fractional cost |
| Standard-resolution stock photography | Diffusion models generate bespoke imagery instantly, collapsing prices |
| Entry-level content translation | Multilingual LLMs achieve human-like fluency for mainstream languages |

Plan your trajectory around these declining demand curves.

8. Closing Advice

The AI tide is rising fastest in the shallow end of the talent pool—where routine work typically begins. Your mission is to out-swim automation by stacking uniquely human capabilities on top of technical fluency. View AI not as a competitor but as the next-gen operating system for your career.

Get in front of it, and you will ride the crest into industries that barely exist today. Wait too long, and you may find the entry ramps gone.

Remember: technology doesn’t take away jobs—people who master technology do.

Go build, iterate and stay curious. The decade belongs to those who collaborate with their algorithms.
