Retrieval-Augmented Generation (RAG) has moved from a conceptual novelty to a foundational strategy in state-of-the-art AI systems. As AI models reach new performance ceilings, the hunger for real-time, context-aware, and trustworthy outputs is pushing the boundaries of what traditional large language models (LLMs) can deliver. Enter the next wave of RAG—smarter, faster, and more scalable than ever before.

This post explores the latest technological advances in RAG, what differentiates them from previous iterations, and why professionals in AI, software development, knowledge management, and enterprise architecture must pivot their attention here—immediately.

🔍 RAG 101: A Quick Refresher

At its core, Retrieval-Augmented Generation is a framework that enhances LLM outputs by grounding them in external knowledge retrieved from a corpus or database. Unlike traditional LLMs that rely solely on static training data, RAG systems perform two main steps:

- Retrieve: Use a retriever (often vector-based semantic search) to find the most relevant documents in a knowledge base.

- Generate: Feed the retrieved content into a generator (like GPT or LLaMA) to produce a more accurate, contextually grounded response.

This tends to reduce hallucination, improves accuracy on questions the knowledge base covers, and lets the system pick up new information as soon as it is indexed, without retraining the model.
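The two steps above can be sketched in a few lines. This is a minimal illustration, not a production design: it assumes a bag-of-words cosine similarity in place of a real embedding model, and it stops at building the prompt because the generator call depends on whichever LLM you use. The `corpus` contents and the function names are made up for the example.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split on non-alphanumeric characters."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Step 1: rank documents by similarity to the query and keep the top k."""
    q = Counter(tokenize(query))
    ranked = sorted(corpus, key=lambda doc: cosine(q, Counter(tokenize(doc))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Step 2 (input side): place the retrieved passages in front of the question."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return f"Answer using only the sources below.\n{context}\n\nQuestion: {query}"


# Illustrative corpus (assumption: invented for this example).
corpus = [
    "The refund window for annual plans is 30 days.",
    "Support is available by chat on weekdays.",
    "Annual plans renew automatically unless cancelled.",
]
question = "How long is the refund window?"
passages = retrieve(question, corpus, k=1)
prompt = build_prompt(question, passages)
print(prompt)  # this string would be sent to the generator (LLM call omitted)
```

Swapping the `cosine` scorer for embeddings from a vector store changes the quality of step 1 but not the overall shape of the pipeline.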

🧠 The Latest Technological Advances in RAG (Mid-2025)

Here are the most noteworthy innovations that are shaping the current RAG landscape:

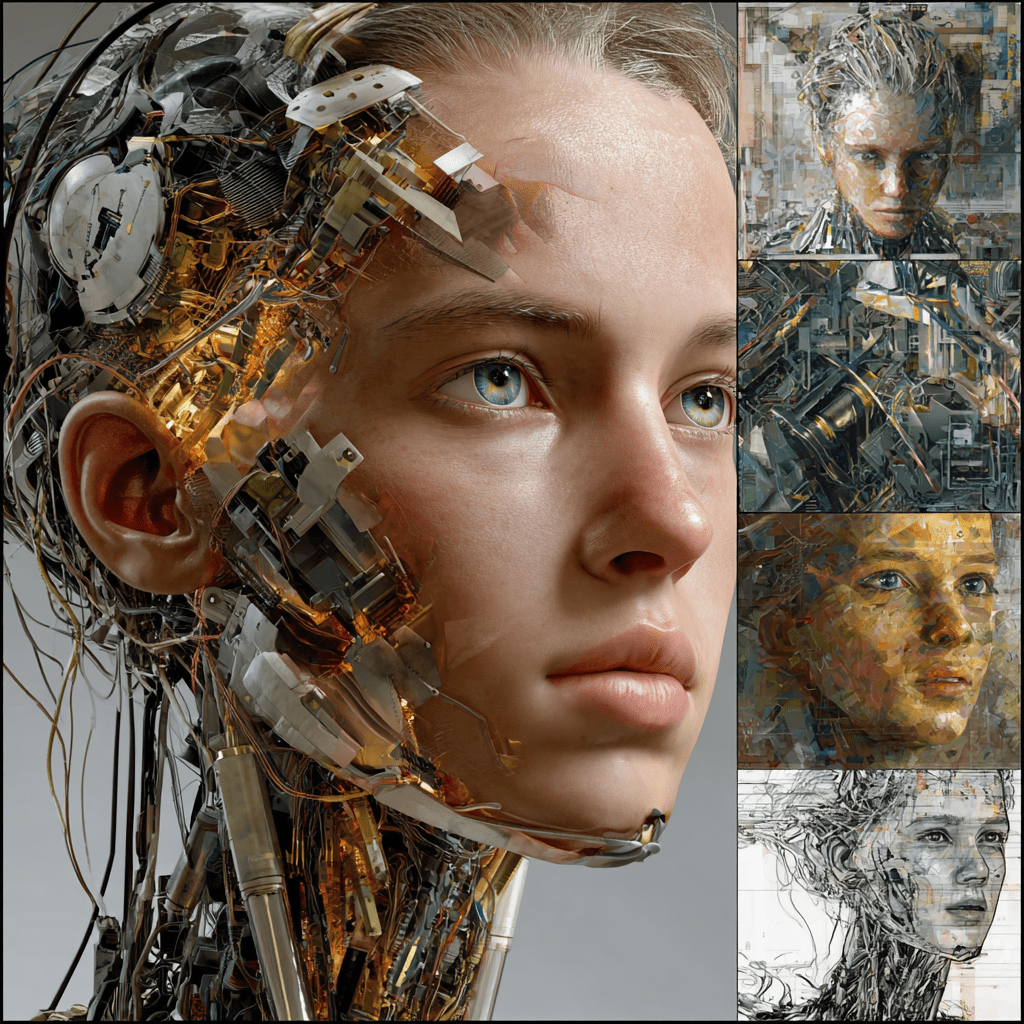

1. Multimodal RAG Pipelines

What’s new:

RAG is no longer confined to text. The latest systems integrate image, video, audio, and structured data into the retrieval step.

Example:

Meta’s multimodal RAG implementations now allow a model to pull insights from internal design documents, videos, and GitHub code in the same pipeline, feeding all of it into the generator to answer complex multi-domain questions.

Why it matters:

The enterprise world is awash in heterogeneous data. Modern RAG systems can now connect dots across formats, creating systems that “think” like multidisciplinary teams.
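One simple way to picture a mixed-format retrieval step is a single index in which every asset, whatever its format, is represented by a text stand-in (a caption, a transcript, a docstring). The sketch below assumes that approach and uses plain word overlap for scoring; real systems often use multimodal embedding models instead. The `Asset` class, URIs, and surrogate texts are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Asset:
    modality: str   # "doc", "video", "code", ...
    uri: str        # hypothetical location of the original asset
    surrogate: str  # text stand-in: caption, transcript, or docstring


def tokens(text: str) -> set[str]:
    """Lowercased alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search(query: str, assets: list[Asset], k: int = 3) -> list[Asset]:
    """Rank assets of every modality by word overlap with the query."""
    q = tokens(query)
    scored = [(len(q & tokens(a.surrogate)), a) for a in assets]
    scored = [pair for pair in scored if pair[0] > 0]  # drop assets with no overlap
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [a for _, a in scored[:k]]


assets = [
    Asset("doc", "design/auth.md", "Design notes for the login token refresh flow"),
    Asset("video", "talks/auth-review.mp4", "Transcript: we walk through token refresh failures on mobile"),
    Asset("code", "src/auth/refresh.py", "def refresh_token(session): renew an expired login token"),
    Asset("doc", "design/billing.md", "Invoice rounding rules"),
]

hits = search("why does token refresh fail", assets)
for a in hits:
    print(a.modality, a.uri)
```

The generator then receives passages drawn from all three formats in one context, which is the "connect dots across formats" behavior described above.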

2. Long Context + Hierarchical Memory Fusion

What’s new:

Advanced memory management with hierarchical retrieval is allowing models to retrieve from terabyte-scale corpora while maintaining high precision.

Example:

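Projects like MemGPT, which pages information between the model’s context window and external storage, and long-context models such as Cohere’s extend how much material a system can work with at once; some models now advertise context windows of around 1 million tokens. Both approaches reduce chunking errors and improve multi-turn dialogue continuity.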

Why it matters:

This makes RAG viable for deeply nested knowledge bases—legal documents, pharma trial results, enterprise wikis—where context fragmentation was previously a blocker.
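Hierarchical retrieval can be sketched as a two-level lookup: first choose the most relevant sections from short summaries, then rank chunks only inside those sections. The example below is a toy under stated assumptions: the `index` dictionary, its summaries, and the word-overlap scorer are invented for illustration, and a real system would typically use embeddings at both levels.

```python
import re


def words(text: str) -> set[str]:
    """Lowercased alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap(query: str, text: str) -> int:
    """Count distinct query words that also appear in the text."""
    return len(words(query) & words(text))


# Hypothetical two-level index: section summary -> chunks in that section.
index = {
    "Clinical trial dosing and adverse events": [
        "Dosing started at 5 mg and increased to 20 mg by week four.",
        "Adverse events were mild; headache was the most common.",
    ],
    "Contract termination and liability": [
        "Either party may terminate with 60 days written notice.",
        "Liability is capped at fees paid in the prior 12 months.",
    ],
}


def hierarchical_retrieve(query: str, top_sections: int = 1, top_chunks: int = 1) -> list[str]:
    # Level 1: pick the most relevant sections using only their summaries.
    sections = sorted(index, key=lambda s: overlap(query, s), reverse=True)[:top_sections]
    # Level 2: rank chunks, but only inside the selected sections.
    candidates = [chunk for s in sections for chunk in index[s]]
    return sorted(candidates, key=lambda c: overlap(query, c), reverse=True)[:top_chunks]


result = hierarchical_retrieve("What adverse events occurred in the trial?")
print(result)
```

Because level 2 only scans chunks from the chosen sections, the expensive fine-grained comparison runs over a small slice of the corpus, which is what keeps this pattern practical for very large knowledge bases.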

3. Dynamic Indexing with Auto-Updating Pipelines

What’s new:

Next-gen RAG pipelines now include real-time indexing and feedback loops that auto-adjust relevance scores based on user interaction and model confidence.

Example:

ServiceNow, Databricks, and Snowflake are embedding dynamic RAG capabilities into their enterprise stacks—enabling on-the-fly updates as new knowledge enters the system.

Why it matters:

This shrinks the lag between knowledge creation and AI utility. It also means RAG is no longer a static architectural feature, but a living knowledge engine.
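A rough sketch of the idea: an index that accepts new documents at any time and folds user feedback into its ranking. Everything here is an assumption made for illustration, including the `LiveIndex` class, the additive feedback term, and the `feedback_weight` value; production systems would persist the index and use a more careful relevance model.

```python
import re
from collections import defaultdict


class LiveIndex:
    """Toy in-memory index that accepts new documents and user feedback at any time."""

    def __init__(self, feedback_weight: float = 0.5):
        self.docs: dict[str, str] = {}
        self.feedback: defaultdict[str, float] = defaultdict(float)
        self.feedback_weight = feedback_weight  # assumed tuning knob

    def upsert(self, doc_id: str, text: str) -> None:
        """Add or replace a document; it is searchable on the next query."""
        self.docs[doc_id] = text

    def record_feedback(self, doc_id: str, helpful: bool) -> None:
        """Nudge a document's score up or down based on a user signal."""
        self.feedback[doc_id] += 1.0 if helpful else -1.0

    def search(self, query: str, k: int = 2) -> list[str]:
        q = set(re.findall(r"[a-z0-9]+", query.lower()))

        def score(doc_id: str) -> float:
            doc_words = set(re.findall(r"[a-z0-9]+", self.docs[doc_id].lower()))
            return len(q & doc_words) + self.feedback_weight * self.feedback[doc_id]

        return sorted(self.docs, key=score, reverse=True)[:k]


index = LiveIndex()
index.upsert("kb-1", "Reset your password from the account settings page")
index.upsert("kb-2", "Password reset links expire after one hour")
before = index.search("password reset", k=1)

index.record_feedback("kb-2", helpful=True)  # a user marks kb-2 as useful
index.upsert("kb-3", "New: password reset now supports passkeys")
after = index.search("password reset", k=3)
print(before, after)
```

The new document is retrievable immediately after `upsert`, and the feedback signal changes the ordering without any re-indexing step.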

4. RAG + Agents (Agentic RAG)

What’s new:

RAG is being embedded into agentic AI systems, where agents retrieve, reason, and recursively call sub-agents or tools based on updated context.

Example:

LangChain’s retrieval chains and agents, and OpenAI’s function calling paired with retrieval tools, allow autonomous agents to decide what to retrieve and how to structure queries before generating final outputs.

Why it matters:

We’re moving from RAG as a backend feature to RAG as an intelligent decision-making layer. This unlocks autonomous research agents, legal copilots, and dynamic strategy advisors.
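The control flow of an agentic RAG loop can be sketched without any framework. In this example the `plan` function is a hard-coded stand-in for the model's decision step, and `search_tool` and `KNOWLEDGE` are hypothetical; in a real agent the LLM would choose the action and write the query.

```python
# Hypothetical knowledge store keyed by topic.
KNOWLEDGE = {
    "policy": "Contractors must complete security training within 14 days.",
    "deadline": "The Q3 compliance audit is due on September 30.",
}


def search_tool(query: str) -> str:
    """Hypothetical retrieval tool: return the first entry whose key appears in the query."""
    for key, text in KNOWLEDGE.items():
        if key in query.lower():
            return text
    return ""


def plan(question: str, notes: list[str]) -> dict:
    """Stand-in for the LLM's decision step: choose the next action.

    A real agent would ask the model; a fixed rule keeps this sketch runnable.
    """
    wanted = [k for k in KNOWLEDGE if k in question.lower()]
    missing = [k for k in wanted if KNOWLEDGE[k] not in notes]
    if missing:
        return {"action": "retrieve", "query": f"{missing[0]} details"}
    return {"action": "answer"}


def run_agent(question: str, max_steps: int = 5) -> tuple[str, list[dict]]:
    notes: list[str] = []   # context gathered so far
    trace: list[dict] = []  # record of every decision, useful for debugging
    for _ in range(max_steps):  # hard cap so the loop always terminates
        step = plan(question, notes)
        trace.append(step)
        if step["action"] == "answer":
            break
        found = search_tool(step["query"])
        if found:
            notes.append(found)
    return " ".join(notes), trace


answer, trace = run_agent("What is the training policy and the audit deadline?")
print(trace)
print(answer)
```

The point of the pattern is that retrieval happens as many times as the planner decides it is needed, each time with a query shaped by what has already been found.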

5. Knowledge Compression + Intent-Aware Retrieval

What’s new:

By combining knowledge distillation and intent-driven semantic compression, systems now tailor retrievals not only by relevance, but by intent profile.

Example:

Perplexity AI’s approach to RAG tailors responses based on whether the user is looking to learn, buy, compare, or act—essentially aligning retrieval depth and scope to user goals.

Why it matters:

This narrows the gap between AI systems and personalized advisors. It also reduces cognitive overload by retrieving just enough information, which leaves less irrelevant context for the model to misread.
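To make "retrieval depth and scope aligned to user goals" concrete, here is a small sketch in which a detected intent selects how many passages to fetch and which kind of source to favor. The intent labels, keyword cues, and score bonus are assumptions chosen for the example; it does not describe how any particular product works, and a real system would use a trained intent classifier.

```python
import re

# Assumed intent profiles: how many passages to fetch and which source kind to prefer.
PROFILES = {
    "learn": {"k": 3, "prefer": "guide"},
    "buy": {"k": 1, "prefer": "pricing"},
    "compare": {"k": 2, "prefer": "review"},
}

# Keyword cues are a placeholder for a real intent classifier.
CUES = {
    "buy": {"price", "cost", "buy", "purchase"},
    "compare": {"vs", "versus", "compare", "better"},
}


def words(text: str) -> set[str]:
    """Lowercased alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def detect_intent(query: str) -> str:
    q = words(query)
    for intent, cues in CUES.items():
        if q & cues:
            return intent
    return "learn"  # default when no cue matches


def retrieve(query: str, docs: list[dict]) -> list[dict]:
    profile = PROFILES[detect_intent(query)]
    q = words(query)

    def score(doc: dict) -> float:
        bonus = 1.5 if doc["kind"] == profile["prefer"] else 0.0  # assumed boost
        return len(q & words(doc["text"])) + bonus

    return sorted(docs, key=score, reverse=True)[: profile["k"]]


docs = [
    {"kind": "guide", "text": "How standing desks affect posture"},
    {"kind": "pricing", "text": "Standing desk price list"},
    {"kind": "review", "text": "Standing desk A versus desk B"},
]

buy_hits = retrieve("standing desk price", docs)
learn_hits = retrieve("how do standing desks help posture", docs)
print([d["kind"] for d in buy_hits], [d["kind"] for d in learn_hits])
```

A purchase-style query gets one tightly scoped result, while a learning-style query gets a broader set led by explanatory material.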

🎯 Why RAG Is Advancing Now

The acceleration in RAG development is not incidental—it’s a response to major systemic limitations:

- Hallucinations remain a critical trust barrier in LLMs.

- Enterprises demand real-time, proprietary knowledge access.

- Model training costs are skyrocketing. RAG extends utility without full retraining.

RAG bridges static intelligence (pretrained knowledge) with dynamic awareness (current, contextual, factual content). This is exactly what’s needed in customer support, scientific research, compliance workflows, and anywhere accuracy meets nuance.

🔧 What to Focus on: Skills, Experience, Vision

Here’s where to place your bets if you’re a technologist, strategist, or AI practitioner:

📌 Technical Skills

- Vector search and database management: FAISS, Pinecone, Weaviate

- Embedding engineering: Understanding OpenAI, Cohere, and local embedding models

- Indexing strategy: Hierarchical, hybrid (dense + sparse), or semantic filtering

- Prompt engineering + chaining tools: LangChain, LlamaIndex, Haystack

- Streaming + chunking logic: Optimizing token throughput for long-context RAG
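As a starting point for the chunking item in the list above, here is a minimal sliding-window chunker with overlap. It splits on whitespace as a stand-in for a real tokenizer, and the `size` and `overlap` values are illustrative rather than recommended settings.

```python
def chunk_words(text: str, size: int = 8, overlap: int = 2) -> list[str]:
    """Split text into windows of `size` words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


text = " ".join(f"w{i}" for i in range(1, 15))  # w1 ... w14
chunks = chunk_words(text, size=6, overlap=2)
for c in chunks:
    print(c)
```

The overlap means a sentence that straddles a boundary still appears whole in at least one chunk, at the cost of indexing some words twice.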

📌 Experience to Build

- Integrate RAG into existing enterprise workflows (e.g., internal document search, knowledge worker copilots)

- Run A/B tests on hallucination reduction using RAG vs. non-RAG architectures

- Develop evaluators for citation fidelity, source attribution, and grounding confidence
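A first evaluator for grounding can be very simple. The sketch below scores each sentence of an answer by the share of its words that appear in the best-matching source, then reports the fraction of sentences above a threshold. Lexical overlap is only a crude proxy for factual support, and the function name and `threshold` value are assumptions; stronger evaluators use entailment models or LLM judges.

```python
import re


def words(text: str) -> set[str]:
    """Lowercased alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def support(sentence: str, sources: list[str]) -> float:
    """Share of the sentence's words found in its best-matching source."""
    sent_words = words(sentence)
    if not sent_words or not sources:
        return 0.0
    return max(len(sent_words & words(src)) / len(sent_words) for src in sources)


def grounding_report(answer: str, sources: list[str], threshold: float = 0.6) -> dict:
    """Label each answer sentence as grounded or not and summarize the ratio."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]
    rows = []
    for sentence in sentences:
        score = support(sentence, sources)
        rows.append({"sentence": sentence, "support": round(score, 2), "grounded": score >= threshold})
    grounded = sum(1 for r in rows if r["grounded"])
    return {"rows": rows, "grounded_ratio": grounded / len(rows) if rows else 0.0}


sources = ["Liability is capped at fees paid in the prior 12 months."]
answer = "Liability is capped at fees paid in the prior 12 months. The cap doubles for data breaches."
report = grounding_report(answer, sources)
for row in report["rows"]:
    print(row)
print(report["grounded_ratio"])
```

Here the second sentence has no support in the source, so it is flagged; tracking this ratio across RAG and non-RAG variants gives a rough basis for the A/B comparison mentioned above.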

📌 Vision to Adopt

- Treat RAG not just as retrieval + generation, but as a full-stack knowledge transformation layer.

- Envision autonomous AI systems that self-curate their knowledge base using RAG.

- Plan for continuous learning: Pair RAG with feedback loops and RLHF (Reinforcement Learning from Human Feedback).

🔄 Why You Should Care (Now)

Anyone serious about the future of AI should view RAG as central infrastructure, not a plug-in. Whether you’re building customer-facing AI agents, knowledge management tools, or decision intelligence systems—RAG enables contextual relevance at scale.

Ignoring RAG in 2025 is like ignoring APIs in 2005: you miss one of the defining architecture patterns of the decade.

📌 Final Takeaway

The evolution of RAG is not merely an enhancement—it’s a paradigm shift in how AI reasons, grounds, and communicates. As systems push beyond model-centric intelligence into retrieval-augmented cognition, the distinction between knowing and finding becomes the new differentiator.

Master RAG, and you master the interface between static knowledge and real-time intelligence.